Last week I re-mixed some older works – Q is for Climate (?) and The Whole Earth Chanting – into an 8-channel sound installation for Unfold X 2024 (The Seoul Foundation of Arts and Culture), curated by Sohyeon Park.

The new work is called Qlimate Tongues and will be shown at ‘Cultural Station Seoul 284’ (Old Seoul Train Station) from 7 Nov to 30 Nov 2024.

This will be my first sound installation. It combines AI-generated human and non-human chanting with sound composition driven by quantum computing.

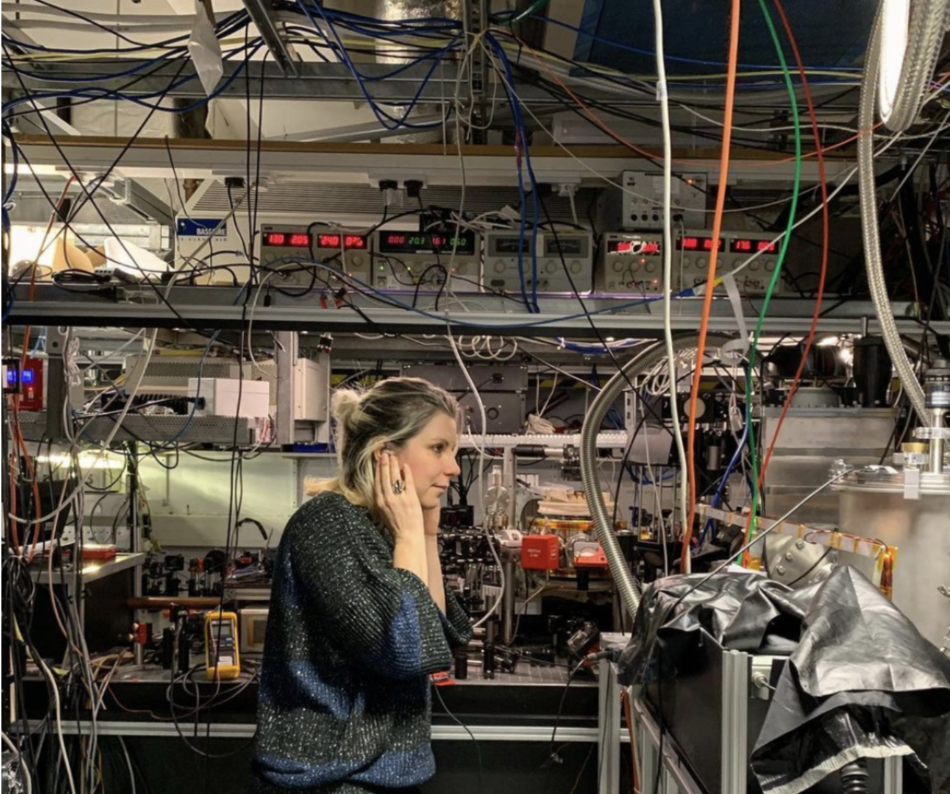

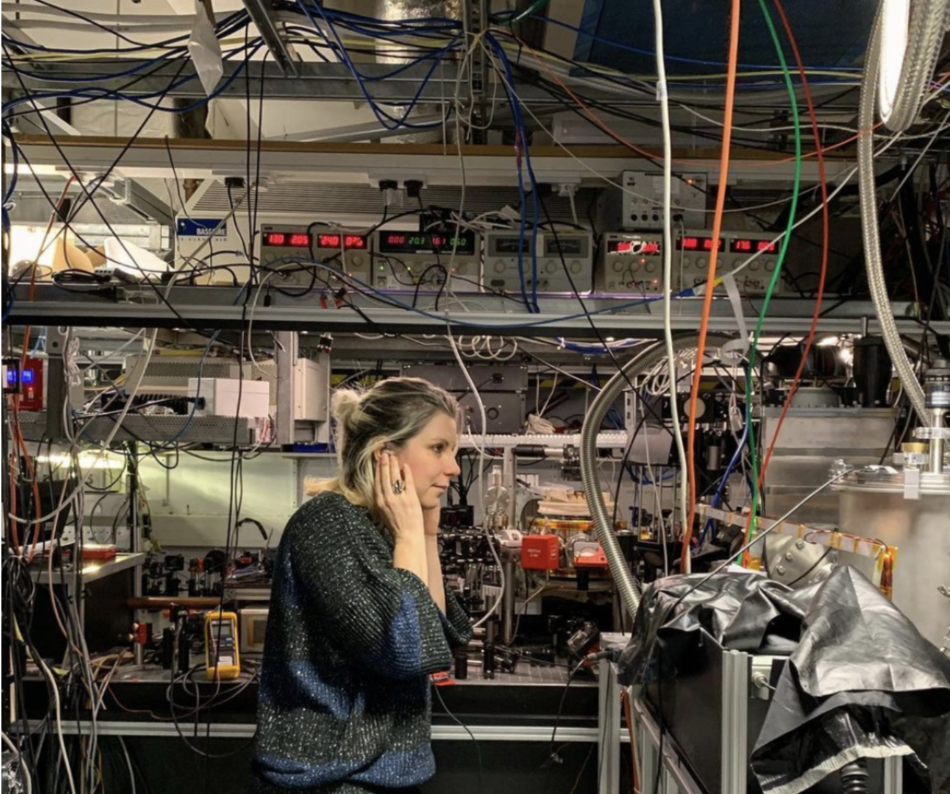

In 2019, I trained a WaveGAN AI model on recordings I made of human and non-human chanting – religious chants, football chants, bird calls, water lapping and the periodic rumblings of inanimate objects, including equipment from my friend’s quantum lab at Imperial College London.

I was interested in the notion of hybridity – the moments within the latent space of the GAN where voices blurred together, entangling humans and non-humans (what new bodies and temporalities do these new utterances suggest?).

Now I’m reworking these AI-generated human and non-human chants with my self-coded quantum sequencer, which generatively layers the AI clips together, following the wave-like patterns of quantum superposition and entanglement. This patterning reminds audiences that reality at its most fundamental is unstable and shape-shifting, with potential for change.

I’ve really enjoyed making the work, as sound is so fluid, ephemeral and emotional. It truly occupies a space – it caresses our skin and rattles our bones.

I love how Qlimate Tongues is a weird combination of mechanic-organic-human, and dare I say it, otherworldly.

I’m excited about how the sound will flow between 8 speakers following the invisible wave-like patterns of quantum entanglement that I extracted from one of IBM’s publicly available quantum computers.
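For readers curious how a pattern from a quantum computer might steer sound between 8 speakers, here is a minimal sketch. It is not the sequencer code used for Qlimate Tongues, and everything in it is an assumption made for illustration: the idea that 3 qubits (which have 2³ = 8 possible measurement outcomes) map one-to-one onto the 8 speakers, the made-up `example_counts` numbers, and the hypothetical `speaker_gains` helper. It takes a table of how often each outcome was measured and turns it into a loudness level per speaker.

```python
import math

# Hypothetical measurement results for a 3-qubit circuit: bitstring -> number
# of times it was observed. These numbers are invented for illustration; they
# loosely resemble an entangled state where "000" and "111" dominate.
example_counts = {"000": 480, "111": 450, "010": 40, "101": 30}


def speaker_gains(counts, n_qubits=3):
    """Map measurement counts to one gain per speaker (2**n_qubits speakers).

    Assumption: speaker i corresponds to the bitstring for the integer i.
    The gain is the square root of the outcome's probability, so the
    squared gains (the acoustic power) sum to 1 across all speakers.
    """
    shots = sum(counts.values())
    if shots == 0:
        raise ValueError("counts must contain at least one measurement")
    gains = []
    for i in range(2 ** n_qubits):
        bits = format(i, f"0{n_qubits}b")  # e.g. 5 -> "101"
        probability = counts.get(bits, 0) / shots
        gains.append(math.sqrt(probability))
    return gains


if __name__ == "__main__":
    for speaker, gain in enumerate(speaker_gains(example_counts), start=1):
        print(f"speaker {speaker}: gain {gain:.2f}")
```

With counts like these, most of the sound would sit in the first and last speakers, with quieter traces elsewhere. Feeding in a fresh set of counts for each moment in time would make the sound drift between speakers as the measured pattern changes.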

Audiences will feel and hear a pulsating, quantum-composed soundscape – continually shifting, (de)cohering with rising and falling human and non-human chants, inviting people to imagine a more spiritual, nuanced, quantum-based understanding of ourselves and our environment.